Updated, January 29th 6:40pm PT: X has restored searches for “Taylor Swift” after blocking them over the weekend as a temporary measure to deal with a flood of fake sexual images of the singer. The ability to search for Taylor Swift on the platform has been “re-enabled,” Joe Benarroch, X’s head of business operations, said in a statement. “We remain vigilant against attempts to spread this content and remove it wherever we find it.”

Previously:

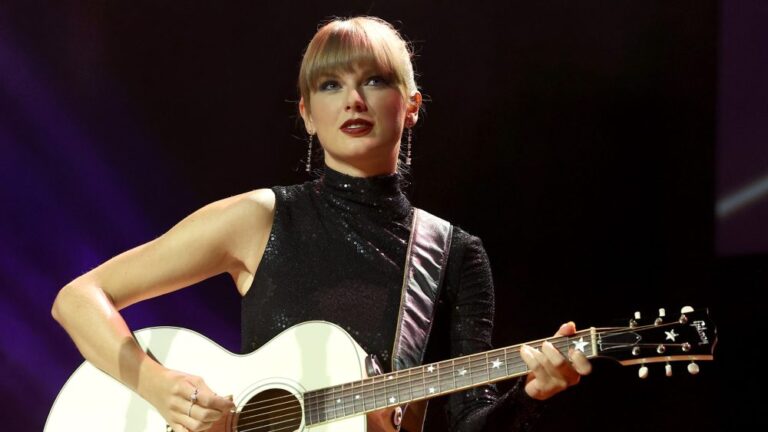

Elon Musk-owned social network X, formerly known as Twitter, has finally taken a first step at the platform level to slow the spread of fake graphic images of Taylor Swift.

As of Saturday (January 27), searching for “Taylor Swift” on X returned an error message that said, “Something went wrong. Try reloading.” However, as users pointed out, X appears to block only that specific text string. For example, queries for “Taylor AI Swift” are still allowed on X.

Asked about the Taylor Swift search block, Joe Benarroch, head of business operations at X, said in a statement that it was a temporary measure.

The move comes days after an AI-generated sexually explicit image of Swift went viral on X and other internet platforms.

On Friday, SAG-AFTRA issued a statement condemning the fake images of Swift as “upsetting, harmful, and deeply disturbing,” adding that developing and distributing fake images, especially those of an obscene nature, without someone’s consent must be made illegal. In an interview with NBC News, Microsoft CEO Satya Nadella called the fake Swift porn images “alarming and horrifying,” adding, “We have to act.” He said that everyone benefits when the online world is a safe world, regardless of their position on the issue.

The White House has also weighed in on the issue. Asked whether President Biden would support legislation making such AI-generated pornography illegal, White House press secretary Karine Jean-Pierre said action should be taken to address the issue.

On Wednesday, January 24, a sexually explicit deepfake of Swift went viral on X, receiving more than 27 million views in 19 hours before the account that originally posted the image was suspended, according to NBC News.

In a late-night post on January 25, X’s safety team said it was “actively removing” all identified images of non-consensual nudity, which are “strictly prohibited” on the platform.

“Posting non-consensual nude (NCN) images is strictly prohibited on X and we have a zero-tolerance policy against such content,” the Safety on X account wrote in the post. “Our team is actively removing all images identified and taking appropriate action against the accounts that posted them. We are closely monitoring the situation to ensure that any further violations are addressed immediately and the content is removed. We are committed to maintaining a safe and respectful environment for all of our users.”