Computer scientists at the University of Chicago have developed a new tool called Nightshade that aims to “poison” digital artwork so that it disrupts the training of AI image generators such as DALL-E, Midjourney, and Stable Diffusion.

Where does Nightshade come from? Nightshade was developed as part of the Glaze Project, run by a group of computer scientists at the University of Chicago led by Professor Ben Zhao. The group previously developed Glaze, a tool designed to confuse AI models by changing how their training algorithms perceive the style of a digital artwork.

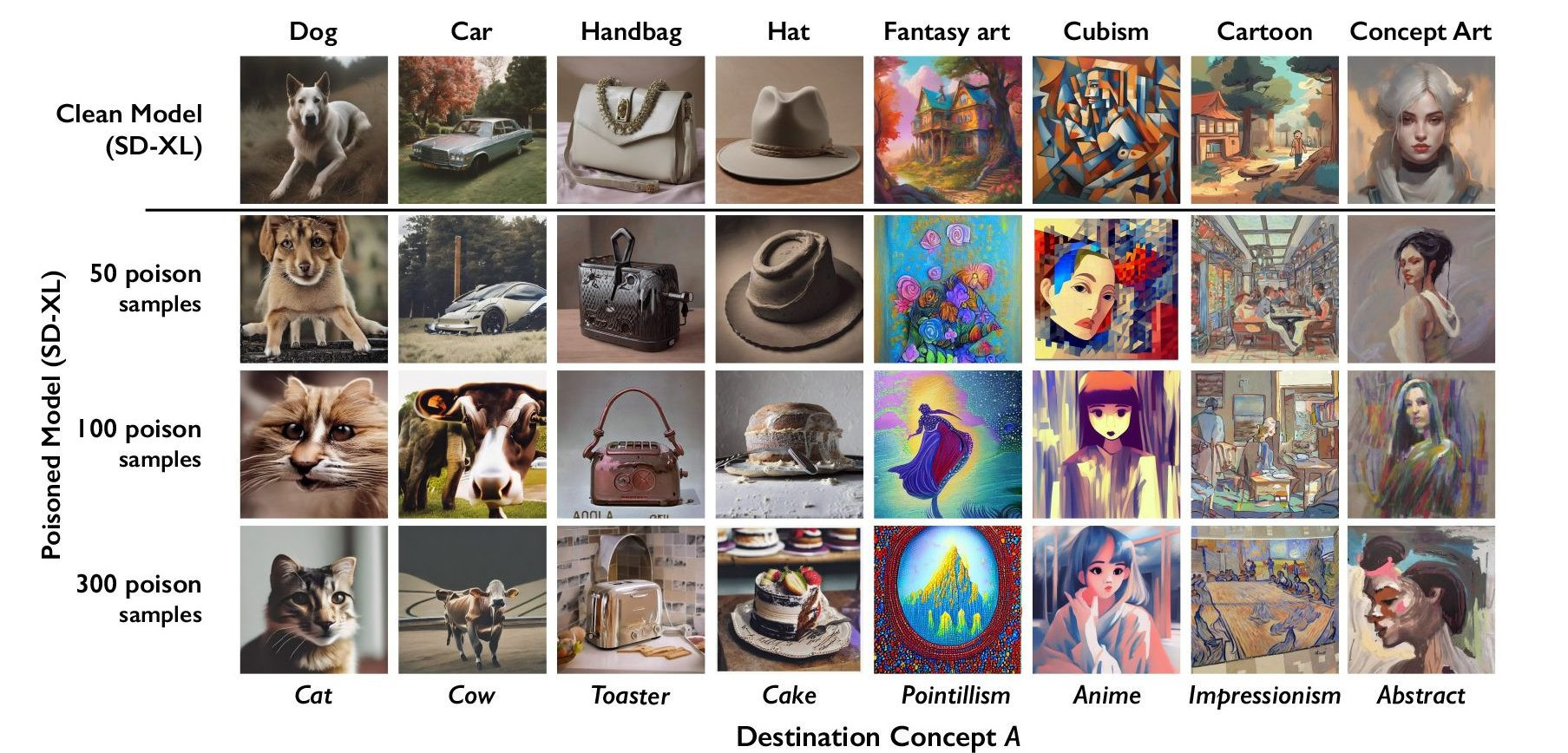

How does it work? The tool uses PyTorch, an open-source machine learning framework, to tag images at the pixel level. The tags are not noticeable to humans looking at the images, but AI models perceive them differently, which corrupts what a model learns when those images are used for training.
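To see why changes that people cannot notice can still matter to a model, consider the toy sketch below. It is not Nightshade's code: it swaps in the fast gradient sign method (FGSM), a textbook adversarial-example technique, applied to a hypothetical linear "cat vs. dog" classifier with made-up weights. Because the model adds up contributions from thousands of pixels, shifting each pixel by at most 2 of 255 brightness levels is enough to flip its verdict. Real image models are deep networks, and the names `score` and `fgsm_perturb` are illustrative only.

```python
def score(weights, pixels):
    """Hypothetical linear model: positive score means "cat", negative means "dog"."""
    return sum(w * p for w, p in zip(weights, pixels))


def sign(v):
    """Return 1, 0 or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(weights, pixels, eps):
    """One fast-gradient-sign step that pushes the score toward "dog".

    For a linear model the gradient of the score with respect to the pixels
    is just `weights`, so nudging every pixel by eps against sign(w) lowers
    the score by about eps * sum(|w|). Results are clipped to [0, 1].
    """
    return [min(1.0, max(0.0, p - eps * sign(w))) for w, p in zip(weights, pixels)]


# A flat gray 100x100 "image" (10,000 pixels in [0, 1]) and made-up weights.
n = 10_000
weights = [0.0101 if i % 2 == 0 else -0.01 for i in range(n)]
image = [0.5] * n
tagged = fgsm_perturb(weights, image, eps=2 / 255)  # at most 2 of 255 brightness levels

print(f"original score: {score(weights, image):+.3f}")  # positive: "cat"
print(f"tagged score:   {score(weights, tagged):+.3f}")  # negative: "dog"
```

The point of the sketch is the arithmetic: many tiny, coordinated changes add up, which is why the per-pixel budget (`eps` here) can stay small while still moving the model's output.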

What is the difference between Glaze and Nightshade? Glaze convinces the training model that it is seeing a different artistic style from the one a human sees. For example, Glaze can make an AI model believe that a “glazed” charcoal drawing is actually an oil painting, while a human looking at the image still sees a charcoal drawing. Whereas Glaze tricks the model into confusing styles, Nightshade convinces it that the content of the image differs from what humans see. A “shaded” image could therefore trick an AI model into treating a photo of a cat as a photo of a dog. If the model trains on that data, a user who enters a text prompt asking for a picture of a cat may receive an image of a dog instead.
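A minimal sketch of the cat-to-dog idea, under loudly simplified assumptions: a hypothetical linear "image encoder" stands in for a model's feature extractor, a random "dog" image serves as an anchor, and projected signed-gradient descent (a standard PGD-style optimizer, not necessarily what Nightshade uses) searches for a perturbation within a per-pixel budget `eps` that pulls the cat image's features toward the dog's. The function names (`encode`, `feature_loss`, `shade`) and all parameter values are made up for illustration; this is not the Glaze Project's implementation.

```python
import random


def encode(W, x):
    """Hypothetical toy 'image encoder': a linear map from pixels to features."""
    return [sum(w * p for w, p in zip(row, x)) for row in W]


def feature_loss(W, x, target):
    """Squared distance between the image's features and the target features."""
    return sum((f - t) ** 2 for f, t in zip(encode(W, x), target))


def sign(v):
    """Return 1, 0 or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def shade(W, image, anchor, eps=0.03, step=0.003, iters=100):
    """Toy projected signed-gradient descent toward an anchor's features.

    Looks for delta with |delta_j| <= eps so that encode(W, image + delta)
    moves toward encode(W, anchor): the toy model "sees" the anchor's content
    while the pixels stay close to `image`. Returns the lowest-loss image found.
    """
    target = encode(W, anchor)
    delta = [0.0] * len(image)
    best, best_loss = list(image), feature_loss(W, image, target)
    for _ in range(iters):
        x = [p + d for p, d in zip(image, delta)]
        residual = [f - t for f, t in zip(encode(W, x), target)]
        # Gradient of the squared feature distance w.r.t. pixels: 2 * W^T r.
        grad = [2 * sum(W[k][j] * residual[k] for k in range(len(W)))
                for j in range(len(image))]
        for j, g in enumerate(grad):
            d = delta[j] - step * sign(g)                # signed gradient step
            d = max(-eps, min(eps, d))                   # stay within the eps budget
            d = max(-image[j], min(1.0 - image[j], d))   # keep pixel in [0, 1]
            delta[j] = d
        x = [p + d for p, d in zip(image, delta)]
        loss = feature_loss(W, x, target)
        if loss < best_loss:
            best, best_loss = x, loss
    return best


rng = random.Random(0)
n_pixels, n_features = 64, 4
W = [[rng.uniform(-1, 1) for _ in range(n_pixels)] for _ in range(n_features)]
cat = [rng.random() for _ in range(n_pixels)]  # stand-in "cat" image
dog = [rng.random() for _ in range(n_pixels)]  # stand-in "dog" anchor image
target = encode(W, dog)
shaded = shade(W, cat, dog)
print("feature distance before/after:", feature_loss(W, cat, target), feature_loss(W, shaded, target))
print("largest pixel change:", max(abs(a - b) for a, b in zip(shaded, cat)))  # never above eps
```

Raising `eps` lets the features move further toward the anchor at the cost of a more visible change, which loosely mirrors the adjustable distortion level discussed next.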

What are the disadvantages? The process of glazing and shading adds noise to digital images. The level of distortion varies from image to image and can be adjusted by the user.

Does that mean this is the end of image generation software? Not at all. It’s worth pointing out that the Glaze Project is not anti-AI: as mentioned earlier, Glaze and Nightshade themselves use open-source AI software to tag images. Rather, the tools were developed to help create an ecosystem in which anyone training an image generator would need permission from the rights holder to access unaltered training images.

What does the Glaze project gain from this? According to Zhao, it’s not about money. On the group’s website he writes:

> Our main goal is to discover and learn new things through research, and thereby make a positive impact on the world. I (Ben) speak for myself (and I think the team does too) when I say I’m not interested in profits. No business model, no subscriptions, no hidden fees, no startup.
