In the next section, we delve deeper into the experimental steps, present the results, and compare them with previous results.

After loading the ADNI MRI data, we augmented the images and applied the adaptive synthetic sampling (ADASYN) approach to address class imbalance, as shown in Figure 2. After applying ADASYN, the dataset grew to 3,000 images. We then split the data into training, validation, and test sets using the proportions shown in Figure 3. Finally, we used the training data to train the proposed model.
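ADASYN creates more synthetic samples for minority examples that are harder to learn, meaning those whose nearest neighbours mostly belong to other classes. The sketch below is a minimal NumPy version for one minority class. It assumes each image has already been flattened into a feature vector, and the neighbourhood size `k` and balance level `beta` are illustrative defaults. It is not the authors' implementation. For the 5-way task it would be called once per minority class.

```python
import numpy as np


def adasyn_oversample(X, y, minority, k=5, beta=1.0, seed=0):
    """Minimal ADASYN sketch: oversample one minority class against all others.

    X: (n_samples, n_features) array, e.g. flattened images (assumption).
    y: (n_samples,) non-negative integer labels.
    beta=1.0 aims to bring the minority class up to the size of the largest class.
    """
    rng = np.random.default_rng(seed)
    idx_min = np.flatnonzero(y == minority)
    X_min = X[idx_min]
    n_min = len(idx_min)
    n_target = int(np.bincount(y).max())
    G = int((n_target - n_min) * beta)  # total number of synthetic samples
    if G <= 0 or n_min < 2:
        return X, y

    # Step 1: hardness ratio r_i = share of other-class samples among the
    # k nearest neighbours (searched in the full dataset) of each minority sample.
    d_all = np.linalg.norm(X_min[:, None, :] - X[None, :, :], axis=2)
    d_all[np.arange(n_min), idx_min] = np.inf  # exclude the sample itself
    nn_all = np.argsort(d_all, axis=1)[:, :k]
    r = (y[nn_all] != minority).sum(axis=1) / k
    r = r / r.sum() if r.sum() > 0 else np.full(n_min, 1.0 / n_min)

    # Step 2: harder samples receive more synthetic neighbours.
    g = np.rint(r * G).astype(int)

    # Step 3: interpolate towards random minority-class neighbours.
    d_min = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(d_min, np.inf)
    nn_min = np.argsort(d_min, axis=1)[:, :min(k, n_min - 1)]
    synthetic = []
    for i, count in enumerate(g):
        for _ in range(count):
            j = rng.choice(nn_min[i])
            lam = rng.random()
            synthetic.append(X_min[i] + lam * (X_min[j] - X_min[i]))
    if not synthetic:
        return X, y
    X_new = np.vstack([X, np.array(synthetic)])
    y_new = np.concatenate([y, np.full(len(synthetic), minority, dtype=y.dtype)])
    return X_new, y_new


# Hypothetical 2-D toy data: 20 majority samples (class 0), 5 minority (class 1).
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 1.0, (20, 2)), rng.normal(3.0, 1.0, (5, 2))])
y = np.array([0] * 20 + [1] * 5)
X_res, y_res = adasyn_oversample(X, y, minority=1)
print(np.bincount(y_res))  # class 1 is now roughly the size of class 0
```

For production use, the `ADASYN` class in the imbalanced-learn package (`fit_resample(X, y)`) provides a tested implementation of the same idea.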

The proposed model consists of two different CNNs that are combined in the classification stage. We applied each network separately to the 5-way multiclass MRI dataset. Performance was evaluated with accuracy, precision, recall, balanced accuracy, the Matthews correlation coefficient (MCC), and the loss. Table 4 compares the performance of each individual network with that of the combined CNN.
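Balanced accuracy and the MCC are less sensitive to class imbalance than plain accuracy. The sketch below computes both from a confusion matrix, with rows as true classes and columns as predicted classes. For more than two classes it uses the standard multiclass generalization of the MCC, which reduces to the familiar binary formula for two classes. The function names and the example matrix are illustrative. They are not taken from the paper's code or results.

```python
import math


def balanced_accuracy(cm):
    """Mean per-class recall; cm[i][j] = samples of true class i predicted as j."""
    recalls = [row[i] / sum(row) for i, row in enumerate(cm) if sum(row) > 0]
    return sum(recalls) / len(recalls)


def multiclass_mcc(cm):
    """Multiclass Matthews correlation coefficient computed from a confusion matrix."""
    n = len(cm)
    s = sum(sum(row) for row in cm)                          # total samples
    c = sum(cm[k][k] for k in range(n))                      # correct predictions
    t = [sum(cm[k]) for k in range(n)]                       # true count per class
    p = [sum(cm[i][k] for i in range(n)) for k in range(n)]  # predicted count per class
    num = c * s - sum(pk * tk for pk, tk in zip(p, t))
    den = math.sqrt((s * s - sum(pk * pk for pk in p)) *
                    (s * s - sum(tk * tk for tk in t)))
    return num / den if den > 0 else 0.0


cm = [[50, 10], [5, 35]]  # hypothetical binary confusion matrix
print(balanced_accuracy(cm), multiclass_mcc(cm))
```

For this two-class example, the result matches the binary formula (TP·TN − FP·FN) / √((TP+FP)(TP+FN)(TN+FP)(TN+FN)) with TP = 35, TN = 50, FP = 10, FN = 5.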

Tables 5, 6, and 7 report the classification performance of these networks in terms of recall, precision, F1 score, and support, where "support" is the number of samples in each class.
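The per-class scores in such a classification report follow directly from the confusion matrix. Precision divides the diagonal entry by its column sum. Recall divides it by its row sum. F1 is the harmonic mean of precision and recall, and support is the row sum. A minimal sketch follows, using a hypothetical three-class matrix rather than the paper's data.

```python
def classification_report(cm, labels):
    """Per-class precision, recall, F1 and support from a confusion matrix.

    cm[i][j] = number of samples of true class i predicted as class j.
    """
    n = len(cm)
    report = {}
    for k, name in enumerate(labels):
        tp = cm[k][k]
        support = sum(cm[k])                          # true samples of class k
        predicted = sum(cm[i][k] for i in range(n))   # samples predicted as k
        precision = tp / predicted if predicted else 0.0
        recall = tp / support if support else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[name] = {"precision": precision, "recall": recall,
                        "f1": f1, "support": support}
    return report


cm = [[8, 2, 0], [1, 9, 0], [0, 0, 10]]  # hypothetical counts
report = classification_report(cm, ["CN", "MCI", "AD"])
for name, scores in report.items():
    print(name, {key: round(value, 3) for key, value in scores.items()})
```

scikit-learn's `classification_report` in `sklearn.metrics` produces the same per-class table directly from label arrays.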

As the results show, reducing the filter size can improve the classification results. Specifically, CNN2, which uses 5 × 5 filters, needs twice as many filters as CNN1 (which uses 3 × 3 filters) to achieve accuracy comparable to that of CNN1. Furthermore, combining the two networks yields higher accuracy than either network alone. We attribute this improvement to the two networks complementing each other and capturing different perspectives on the data.
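One way to gauge the cost of CNN2's configuration is to count trainable weights. A k × k convolution with C_in input channels and F filters has (k²·C_in + 1)·F parameters, including one bias per filter. The sketch below compares two hypothetical two-layer stacks on single-channel input. The first uses 3 × 3 filters with 32 and 64 filters. The second uses 5 × 5 filters with the filter counts doubled to 64 and 128. These filter counts are assumptions for illustration, not the paper's architecture.

```python
def conv2d_params(kernel, in_channels, filters, bias=True):
    """Trainable parameters of a 2-D convolution layer with square kernels."""
    return (kernel * kernel * in_channels + (1 if bias else 0)) * filters


def stack_params(kernel, filters_per_layer, in_channels=1):
    """Total conv parameters of a plain stack where each layer feeds the next."""
    total, c_in = 0, in_channels
    for f in filters_per_layer:
        total += conv2d_params(kernel, c_in, f)
        c_in = f  # the next layer sees this layer's filters as its input channels
    return total


cnn1_like = stack_params(3, [32, 64])    # hypothetical 3 x 3 branch
cnn2_like = stack_params(5, [64, 128])   # hypothetical 5 x 5 branch, doubled filters
print(cnn1_like, cnn2_like, round(cnn2_like / cnn1_like, 1))
```

Here the 5 × 5 stack has 206,592 convolutional weights versus 18,816, roughly 11 times as many. The larger kernel contributes a factor of 25/9, and doubling both input and output channels in the second layer contributes roughly another factor of 4.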

To evaluate the effectiveness of this approach across different classification tasks, we applied the joint network to our dataset and report experimental results for the benchmark 5-way [16], 4-way [28], and 3-way [47] multiclass classification problems.

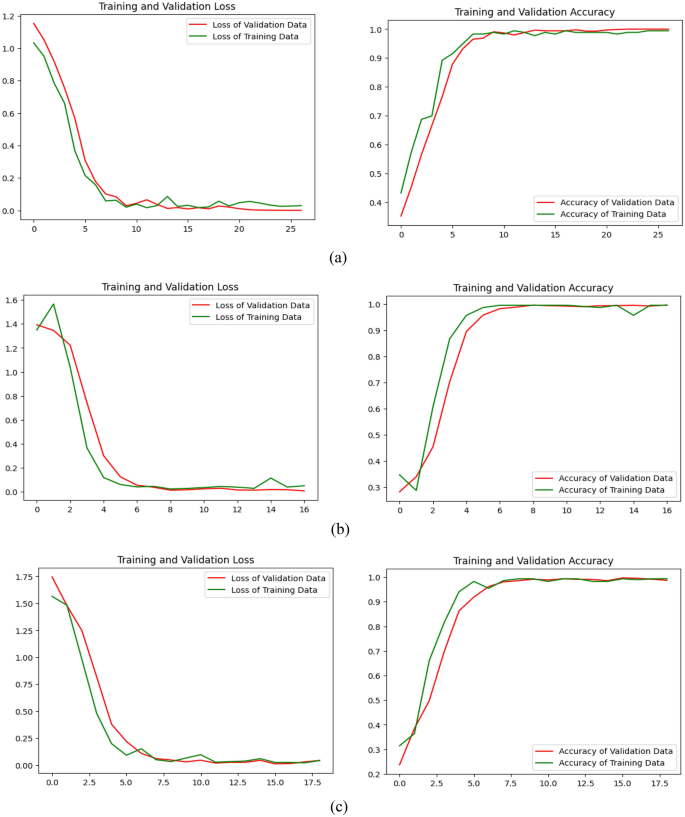

Figure 6 plots the training and validation accuracy, and the training and validation loss, of the proposed model for the 3-way, 4-way, and 5-way multiclass problems. Table 8 compares the performance of the proposed model across these problems side by side.

Training/validation loss and training/validation accuracy of the proposed model: (a) 3-way multiclass; (b) 4-way multiclass; (c) 5-way multiclass.

Confusion matrix

A confusion matrix gives a numerical breakdown of a model's predictions during the testing phase and is used to evaluate classification models and calculate their metrics [43].
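A confusion matrix can be built by counting (true, predicted) label pairs over the test set. Overall accuracy is then the diagonal sum divided by the number of samples. A minimal sketch follows, assuming integer class indices (for example, 0 to 4 for the five classes). The label arrays in the example are hypothetical.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i][j] counts test samples whose true class is i and predicted class is j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def accuracy(cm):
    """Fraction of samples on the diagonal (correctly classified)."""
    correct = sum(cm[k][k] for k in range(len(cm)))
    return correct / sum(map(sum, cm))


y_true = [0, 0, 1, 2, 2]   # hypothetical ground-truth labels
y_pred = [0, 1, 1, 2, 0]   # hypothetical model predictions
cm = confusion_matrix(y_true, y_pred, n_classes=3)
print(cm, accuracy(cm))
```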

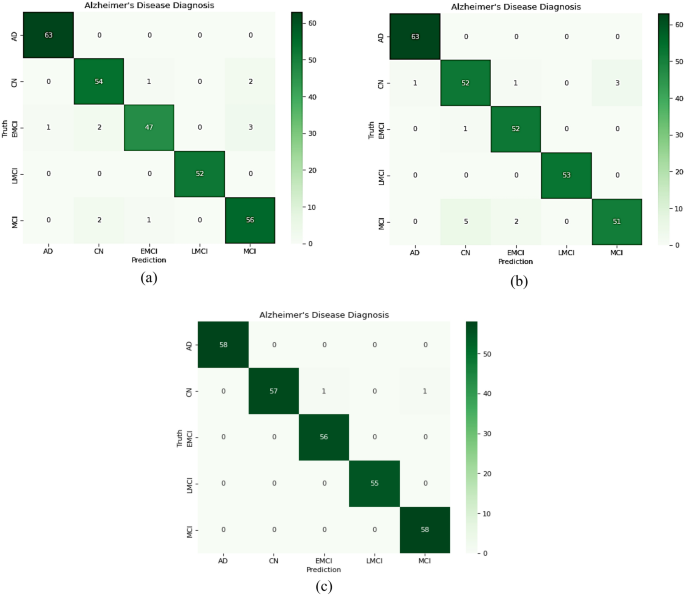

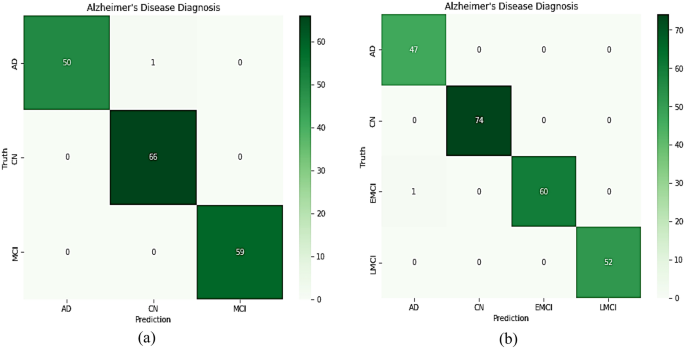

To evaluate how well the proposed network performed on each class of the test data, we computed its confusion matrices, shown in Figures 7 and 8. Tables 7, 9, and 10 additionally give the classification report of the proposed model in terms of precision, recall, and F1 score.

Confusion matrices of the proposed model on the test data: (a) CNN1; (b) CNN2; (c) overall combined CNN.

Confusion matrices of the proposed model on the test data: (a) 5-way multiclass; (b) 4-way multiclass.

Figure 7c shows that, in the 5-way classification, one CN subject was misclassified as EMCI and another as MCI. This error pattern is acceptable because, in medical diagnosis, it is preferable to flag a healthy person for further screening than to return a false negative that wrongly rules out disease. As shown in Figure 8, one EMCI subject was misclassified as AD in the 4-way classification, and one EMCI subject was likewise misclassified as AD in the 3-way classification.
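The error analysis above amounts to reading the off-diagonal cells of the confusion matrix. The sketch below lists those cells and separates out the clinically more serious case, a diseased subject predicted as CN. The class order follows the one given for the ROC analysis, but its use for Figure 7c is an assumption. The matrix is a hypothetical 5-way example. It contains only the two CN errors described above, and its other counts are invented for illustration.

```python
LABELS = ["CN", "MCI", "AD", "LMCI", "EMCI"]  # assumed class order


def misclassifications(cm, labels):
    """Return (true_label, predicted_label, count) for every non-zero off-diagonal cell."""
    errors = []
    for i, row in enumerate(cm):
        for j, count in enumerate(row):
            if i != j and count > 0:
                errors.append((labels[i], labels[j], count))
    return errors


# Hypothetical matrix: rows = true class, columns = predicted class.
cm = [
    [48, 1, 0, 0, 1],
    [0, 50, 0, 0, 0],
    [0, 0, 50, 0, 0],
    [0, 0, 0, 50, 0],
    [0, 0, 0, 0, 50],
]
errors = misclassifications(cm, LABELS)
# False negatives for disease: a non-CN subject predicted as CN.
missed_disease = [e for e in errors if e[1] == "CN"]
print(errors, missed_disease)
```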

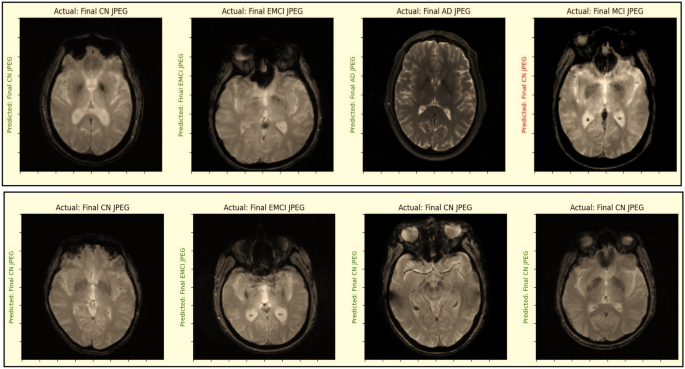

For 3-way, 4-way, and 5-way multiclass classification, the proposed model achieved average accuracies of 99.43%, 99.57%, and 99.3%, respectively. We also checked whether the labels predicted by the proposed model match the actual labels, as shown in Figure 9.

Comparison of the predicted and actual labels.

Grad-CAM analysis

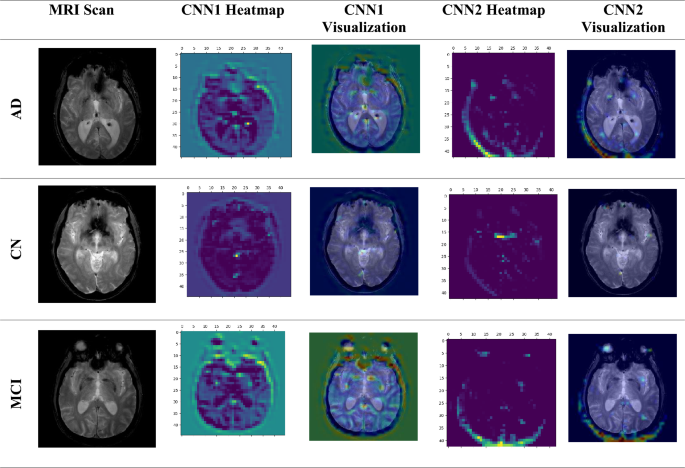

As deep learning continues to advance, a key challenge is making these complex neural networks easier to interpret. This is especially important in applications such as medical imaging, where trust and understanding are paramount. Gradient-weighted Class Activation Mapping (Grad-CAM), developed by Selvaraju et al. [48], can be used to visualize the behavior of deep networks. The technique acts as a magnifying glass for deep neural networks: it shows which regions of the input the model focuses on when analyzing data. The proposed model takes MRI scans as input and is used as a detection technique. Grad-CAM is applied to the last convolutional layer of each of the two proposed CNNs, before the concatenation that produces the predicted labels, to extract the feature maps of the network. The resulting heat map highlights the image regions that are essential for predicting the target class. In this way, we can also assess the contribution of each CNN to the decision and the effect of changing the size and number of filters within each model. Figure 10 shows the heat maps and visualizations obtained by applying Grad-CAM to MRI scans of AD, CN, and MCI subjects. This visual evidence not only improves our understanding of the model's predictions but also supports their validation, so that Alzheimer's disease can be diagnosed more reliably.
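Grad-CAM first weights each feature map A^k of the chosen convolutional layer by the spatial average of the gradient of the class score y^c with respect to that map. It then sums the weighted maps and applies a ReLU. The NumPy sketch below assumes the activations and gradients have already been extracted for the last convolutional layer of one CNN, for example with the deep learning framework's automatic differentiation. Upsampling the map to the MRI resolution and overlaying it on the scan are omitted.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM heat map for one convolutional layer.

    activations: (K, H, W) feature maps A^k of the chosen conv layer.
    gradients:   (K, H, W) gradients dy^c/dA^k of the target-class score y^c.
    Returns an (H, W) map scaled to [0, 1].
    """
    weights = gradients.mean(axis=(1, 2))             # alpha_k: pooled gradients
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A^k
    cam = np.maximum(cam, 0)                          # ReLU keeps positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Hypothetical layer output: 8 feature maps of size 7 x 7 and their gradients.
rng = np.random.default_rng(0)
heatmap = grad_cam(rng.random((8, 7, 7)), rng.normal(size=(8, 7, 7)))
print(heatmap.shape)
```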

Grad-CAM applied to MRI scan images of AD, CN, and MCI subjects.

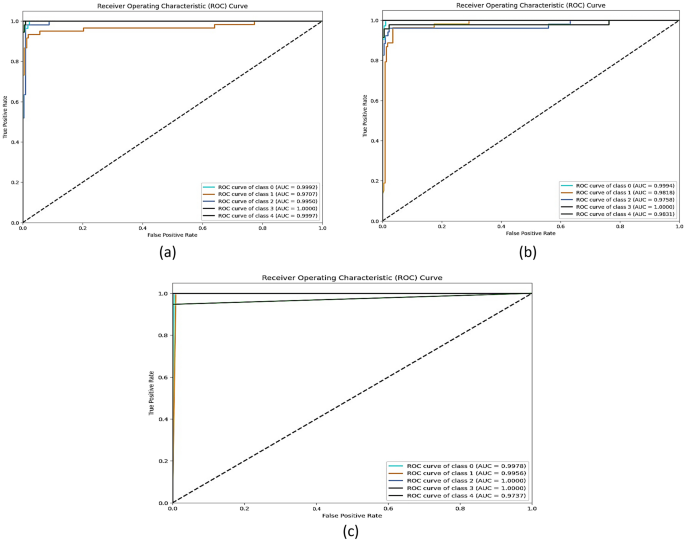

ROC curve analysis

The performance of the proposed model is also evaluated with the receiver operating characteristic (ROC) curve and the area under the curve (AUC) [49]. For multiclass classification, the one-versus-rest approach is used. The ROC curve plots sensitivity (true positive rate) on the y-axis against 1 − specificity (false positive rate) on the x-axis, and the AUC score is the area under this curve. AUC values range from 0 to 1. A value of 0.5 corresponds to random guessing, and the closer the value is to 1, the better the model separates a class from the rest.
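In the one-versus-rest setting, each class in turn is treated as positive and all other classes as negative. The AUC can then be computed without drawing the curve. It equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counting one half. A minimal pure-Python sketch follows, using hypothetical class probabilities.

```python
def binary_auc(scores, positives):
    """ROC AUC as P(random positive outranks random negative); ties count 1/2."""
    pos = [s for s, is_pos in zip(scores, positives) if is_pos]
    neg = [s for s, is_pos in zip(scores, positives) if not is_pos]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            wins += 1.0 if sp > sn else 0.5 if sp == sn else 0.0
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(prob, y_true, n_classes):
    """AUC per class: class k is 'positive', all other classes are 'rest'."""
    return [binary_auc([row[k] for row in prob], [t == k for t in y_true])
            for k in range(n_classes)]


# Hypothetical predicted probabilities for 4 test samples and 3 classes.
prob = [[0.8, 0.1, 0.1],
        [0.2, 0.7, 0.1],
        [0.1, 0.2, 0.7],
        [0.5, 0.15, 0.35]]
y_true = [0, 1, 2, 1]
print(one_vs_rest_auc(prob, y_true, 3))
```

scikit-learn's `roc_auc_score` with `multi_class="ovr"` returns an averaged one-versus-rest AUC, and `roc_curve` gives the curve points for a single binarized class.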

Figure 11 shows the ROC curves of CNN1, CNN2, and the proposed model across the five classes, where classes 0, 1, 2, 3, and 4 refer to CN, MCI, AD, LMCI, and EMCI, respectively. Inspecting Figure 11, we can see that the proposed model significantly improved the AUC values for all classes of Alzheimer's disease. The AUC value of class CN is 0.9992, MCI is 0.9707, AD is 1, LMCI is 1, and EMCI is 0.9737. In contrast, the AUC values obtained with CNN1 were 0.9978 for CN, 0.9956 for MCI, 0.9950 for AD, 1 for LMCI, and 0.9997 for EMCI. The AUC values obtained with CNN2 were 0.9994 for CN, 0.9818 for MCI, 0.9758 for AD, 1 for LMCI, and 0.9831 for EMCI. Therefore, the proposed model is a more accurate and reliable method for diagnosing Alzheimer's disease.

ROC curves and AUC values of (a) CNN1, (b) CNN2, and (c) the proposed model.

Wilcoxon signed-rank test

To ensure that the results were not simply due to chance, we performed a statistical significance analysis. A p-value was calculated for each model using the Wilcoxon signed-rank test. This nonparametric test is commonly used to compare two related (paired) samples. It assesses the pairwise differences across multiple observations from a single dataset and indicates whether these differences are systematically shifted away from zero.

p-values for pairwise comparisons of models [50,51].

Compared with the other models, the proposed model showed superior performance. The p-values for the comparisons between the proposed model and each of the other four models are all below 0.05, indicating that the proposed model significantly outperforms them.
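The signed-rank statistic is computed from paired observations, for example the accuracies of two models on the same folds or runs. Which observations are paired is not specified here, so that pairing is an assumption. The sketch below drops zero differences and ranks the absolute differences, giving tied values their average rank. It then sums the ranks of the positive differences (W+). Finally, it obtains an exact two-sided p-value by enumerating all sign assignments with dynamic programming, which is practical for small samples. The accuracy values in the example are invented. `scipy.stats.wilcoxon` provides a library implementation.

```python
def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test for paired samples x and y.

    Returns (W_plus, p_value). Zero differences are dropped, tied |d| get
    average ranks, and the p-value is exact (sign enumeration via DP).
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:  # assign average ranks to runs of tied |d|
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)

    # Doubled ranks are integers even with ties (averages are multiples of 0.5).
    r2 = [int(round(2 * r)) for r in ranks]
    total = sum(r2)
    counts = [0] * (total + 1)  # counts[s]: sign patterns with doubled W+ == s
    counts[0] = 1
    for r in r2:
        for s in range(total, r - 1, -1):
            counts[s] += counts[s - r]
    w2 = int(round(2 * w_plus))
    n_patterns = 2 ** n
    lower = sum(counts[: w2 + 1]) / n_patterns
    upper = sum(counts[w2:]) / n_patterns
    return w_plus, min(1.0, 2 * min(lower, upper))


# Invented per-fold accuracies of two models evaluated on the same folds.
proposed = [0.991, 0.994, 0.989, 0.996, 0.993, 0.995]
baseline = [0.981, 0.986, 0.984, 0.990, 0.982, 0.988]
print(wilcoxon_signed_rank(proposed, baseline))
```

With six paired observations that all favor one model, the smallest attainable two-sided p-value is 2/64 = 0.03125. Very few pairs therefore cannot reach significance at 0.05.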