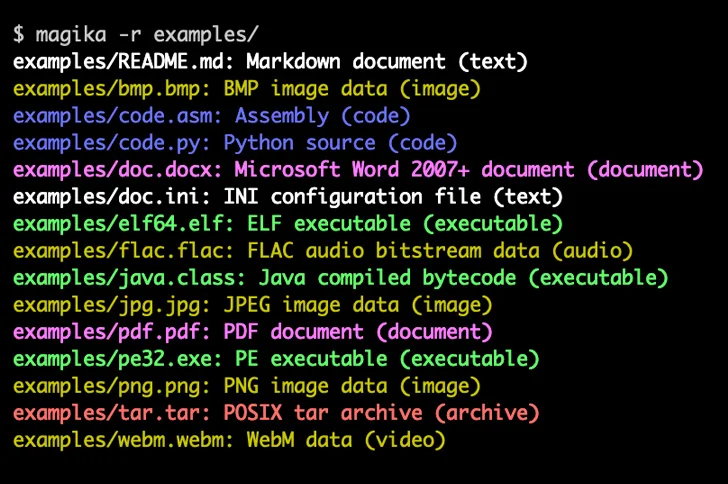

Google announced it will open source Magika, an artificial intelligence (AI)-powered tool for identifying file types, to help defenders accurately detect binary and text file types.

Magika outperforms traditional file identification methods, the company said, delivering an overall 30% improvement in accuracy and up to 95% higher precision on content that is traditionally difficult to identify but potentially problematic, such as VBA, JavaScript, and PowerShell.

The software uses a “highly optimized custom deep learning model” that can accurately identify file types within milliseconds. Magika uses the Open Neural Network Exchange (ONNX) format to run inference.

Google said it uses Magika extensively internally to improve user safety by routing Gmail, Drive, and Safe Browsing files to the appropriate security and content policy scanners.
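The routing step Google describes can be pictured with a short sketch. Everything in it is an assumption made for illustration: `detect_type` is a toy stand-in that checks a few well-known leading byte signatures (it is not Magika's API and uses no model), and the scanner names and the `ROUTES` table are hypothetical, not Google's actual pipeline.

```python
from typing import Callable, Dict


def detect_type(data: bytes) -> str:
    """Toy stand-in for a content-type detector (NOT Magika's API).

    A real deployment would call a trained model here; this version only
    looks at a few well-known leading byte signatures.
    """
    if data.startswith(b"%PDF-"):
        return "pdf"
    if data.startswith(b"MZ"):
        return "pe"
    if data.startswith(b"PK\x03\x04"):
        return "zip"
    try:
        data.decode("utf-8")
    except UnicodeDecodeError:
        return "unknown"
    return "text"


# Hypothetical scanners: each one just reports its own name.
def scan_document(data: bytes) -> str:
    return "document-scanner"


def scan_executable(data: bytes) -> str:
    return "executable-scanner"


def scan_archive(data: bytes) -> str:
    return "archive-scanner"


def scan_text(data: bytes) -> str:
    return "text-scanner"


def scan_generic(data: bytes) -> str:
    return "generic-scanner"


# Hypothetical mapping from detected content type to scanner.
ROUTES: Dict[str, Callable[[bytes], str]] = {
    "pdf": scan_document,
    "pe": scan_executable,
    "zip": scan_archive,
    "text": scan_text,
}


def route(data: bytes, detector: Callable[[bytes], str] = detect_type) -> str:
    """Detect the content type, then hand the bytes to the matching scanner."""
    label = detector(data)
    scanner = ROUTES.get(label, scan_generic)  # unknown types get a fallback
    return scanner(data)
```

Because the detector is passed in as a parameter, a model-based identifier could replace the signature checks without changing the routing table. Unrecognized types fall through to a generic scanner rather than being skipped.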

In November 2023, the tech giant announced RETVec (short for Resilient and Efficient Text Vectorizer), a multilingual text processing model for detecting potentially harmful content such as spam and malicious emails in Gmail.

The announcement comes amid continuing debate over the risks of the rapidly evolving technology and its misuse by state actors linked to Russia, China, Iran, and North Korea to step up their hacking operations. Google said that deploying AI at scale could strengthen digital security and “tilt the cybersecurity balance from attackers to defenders.”

It also highlighted the need for a balanced regulatory approach to the use and deployment of AI, to avoid a future where attackers can innovate but defenders are constrained by AI governance choices.

“AI enables security professionals and defenders to scale their threat detection, malware analysis, vulnerability detection, vulnerability remediation, and incident response efforts,” Google’s Phil Venables and Royal Hansen said. “AI offers the best opportunity to flip the defender’s dilemma and tip the scales of cyberspace to give defenders a decisive advantage over attackers.”

Concerns have also been raised about generative AI models being trained on web-scraped data, which can include personal data.

The UK Information Commissioner’s Office (ICO) pointed out last month: “If we don’t know what a model will be used for, how can we ensure that its downstream use respects data protection and people’s rights and freedoms?”

Additionally, new research shows that large language models can act as seemingly innocuous “sleeper agents.” Such models can be programmed to behave deceptively or maliciously when certain criteria are met or special instructions are provided.

“Such backdoor behavior persists so that it is not removed by standard safety training techniques such as supervised fine-tuning, reinforcement learning, and adversarial training (training that elicits and eliminates risky behavior),” researchers at AI startup Anthropic said in the study.