It is impossible to navigate the internet today without encountering some form of bot, but in recent months, particularly on X, we have observed a new phenomenon: bot-generated content going viral. In some cases, the bot appears to ignore the link to the video it is referring to. Despite their obviously generated text and artificially generated images of things that do not exist, these posts spread rapidly. If you check the replies, almost all of them repeat the same general idea, such as “He’s Two” or “He’s Three,” sometimes using the same words or phrases and sometimes posting the exact same response. Bots also flood posts, viral and non-viral alike, with replies promoting adult content on their own profiles, most of which are outright scams. Bots clog the replies on sites like Reddit and YouTube, and artificially generated images flood Facebook. How do we explain this influx of bot-generated replies and, more novel still, bot-generated viral content, and what does it mean for the user experience on social media?

The dead internet theory emerged on internet forums in recent years in response to a sudden increase in bot activity online. The theory proposes that social media is primarily sustained by interactions between algorithm-driven bot accounts. References to the theory are usually made with a half-ironic awareness of its absurd scale, but given the apparent scale of bot activity today, its broader premise about persistent viral content is harder to dismiss. On social media, we are inundated with content built from words that are algorithmically likely to be profitable and that fit the discursive norms of the site. Engagement is farmed through artificial virality, and indiscriminate content, fake emergencies and the like can likewise profit from advertising and views.

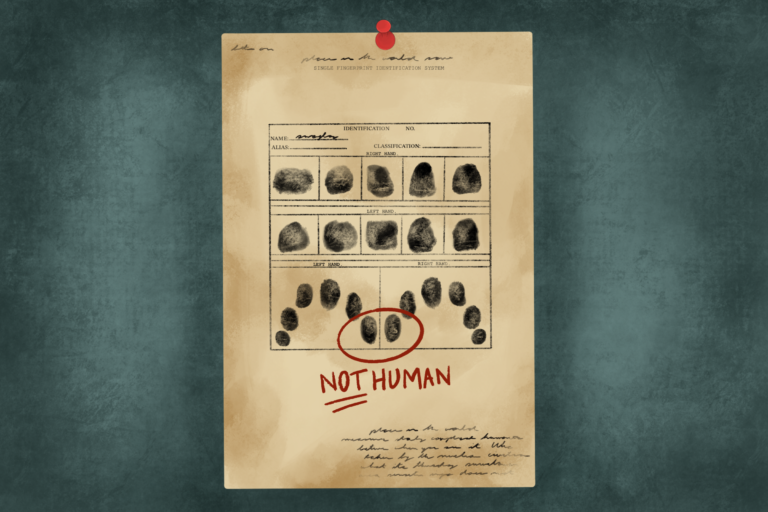

Compounding the unreality of this landscape is the advent of technologies like ChatGPT. LLMs (large language models, which are trained on human writing and generate text by predicting the next token) and image generators like BingAI make bot creation accessible to everyone. These bots can be trained on the “styles” of viral posts and can predict which phrases, and even which word counts, are likely to spread most efficiently. Attention-seeking images, ranging from an explosion at the Pentagon to fancy fashion, are often posted without disclosing that they were generated by AI, and they spread like wildfire. And the technology keeps getting better and more seamless. AI-generated video footage and images were the subject of ridicule a year ago, but now they can reliably fool a younger generation raised on the internet. Surrounded by layers of unreality in images, replies, posters and events, from the dramatic and inflammatory to the mundane, one is left with a feeling of placelessness, accentuated by the immaterial nature of the internet.

It’s also worth noting that this generated content, whether textual, visual or in some other format, depends on gaming the algorithms of the various platforms. Generated content that goes viral on X is different from what goes viral on Facebook, and even within the same platform, content aimed at different audiences can vary widely. Concerns about the positive feedback loops facilitated by internet algorithms are best known through the oft-discussed “alt-right pipeline,” a phenomenon in which social media algorithms show users increasingly extreme (and therefore more attention-grabbing) versions of a type of content, here ideologically loaded, that they have previously consumed.

Exploiting these algorithms means that generated viral content is tailored to the niche each bot is designed to serve while also echoing the anxieties and aspirations of the broader culture, which makes it more appealing to audiences. Without journalistic integrity, whatever generates traffic, attention, views and ad revenue takes precedence. Returning to the fake image of an explosion at the Pentagon, its choice of subject was no coincidence. A visually striking threat with an immediate impact on the upper echelons of government is much sexier than an equally catastrophic event of another kind: compare an explosion at the Pentagon to a major oil spill in a body of fresh water like the Great Lakes. Even when they do not fake catastrophe on this scale, generated posts are often designed to generate significant engagement repeatedly in a short period of time. It doesn’t matter whether the information can be quickly disproved, as long as it reaches as many people as possible. The sheer number of these images is starting to cause fatigue among people navigating this type of content. Serious fabrications, by contrast, make other generated posts seem less offensive: unless something rises to the level of a direct threat, it isn’t interesting. Engagement bait, meanwhile, is simply expected to be artificial.

The internet and social media, like language, were developed to connect people and make the world smaller. But gamifying engagement to generate ad revenue has created a haven for bot-generated images, videos and short messages that are less and less obviously artificial. Using the internet with the understanding that much of what you see may be fake obscures the connections that social media was supposed to create. The crux of the dead internet theory, still a semi-ironic observation just a few years ago, has been validated by records of bots interacting with bots. What was supposed to be a conduit for information and interaction now reflects a distortion of existing desires and anxieties, brought about by generators chasing virality, profit and engagement. It is empty, dishonest and disorienting, and it is shaping the way an entire generation interacts with media and the internet. Unawareness and confusion, or doubt and indifference, muddy the waters of a vast rushing river into which we can easily slip.

Daily Arts contributor Nat Johnson can be reached at nataljo@umich.edu.