(Bloomberg) -- SK Hynix Inc. is increasing spending on advanced chip packaging in hopes of capturing more of the surging demand for high-bandwidth memory, a key component in artificial intelligence development.


Lee Kang-wook, a former Samsung Electronics engineer who now leads packaging development at SK Hynix, said the Icheon-based company is investing more than $1 billion in South Korea to expand and improve the final stage of chip manufacturing. Innovation in this process lies at the core of HBM's advantage as the most sought-after AI memory, and further progress will be key to reducing power consumption, improving performance and solidifying the company's lead in the HBM market.

Lee specializes in advanced methods of combining and connecting semiconductors, which have grown increasingly important with the advent of modern AI and its digestion of vast amounts of data through parallel processing chains. SK Hynix has not disclosed this year's capital spending budget, but the average analyst estimate is 14 trillion won ($10.5 billion). That suggests advanced packaging, which could account for about a tenth of that total, is a major priority.

“The first 50 years of the semiconductor industry were about the front end, the design and manufacturing of the chips themselves,” Lee said in an interview. “But for the next 50 years, it’s going to be all about the back end, the packaging.”

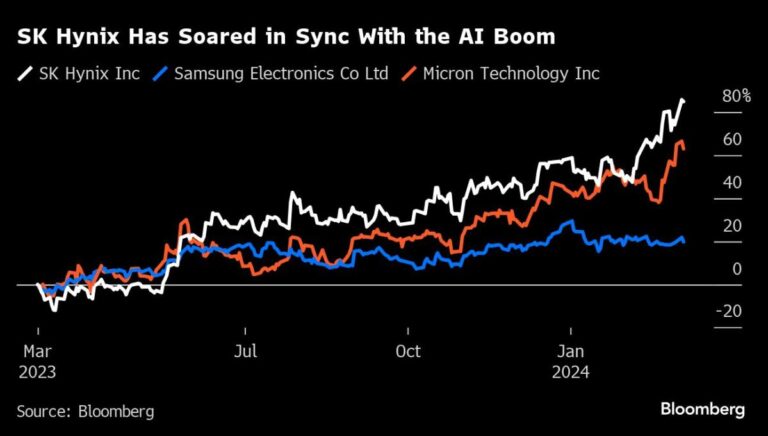

Being first to reach the next milestone in this race can catapult companies into industry leadership. SK Hynix was chosen by Nvidia to supply HBM for a standard-setting AI accelerator, pushing the Korean company's market value to 119 trillion won. Its stock has risen nearly 120% since the start of 2023, making it South Korea's second most valuable company and outperforming both Samsung and U.S. rival Micron Technology.

Lee, now 55, helped pioneer a new way to package HBM2E, the third generation of the technology, and two other major manufacturers quickly followed suit. This innovation played a central role in SK Hynix winning Nvidia as a customer in late 2019.

Stacking chips for better performance has long been a passion of Lee's. In 2000, he received a PhD in 3D integration technology for microsystems from Tohoku University in Japan, studying under Mitsumasa Koyanagi, who invented the stacked-capacitor DRAM used in mobile phones. Lee joined Samsung's memory division as a lead engineer in 2002 and led the development of through-silicon via (TSV)-based 3D packaging technology.

This work later formed the basis for HBM, a type of high-performance memory that stacks chips on top of one another and connects them with TSVs for faster, more energy-efficient data processing.

But back in the pre-smartphone era, Samsung was placing bigger bets elsewhere. And it was the norm for global chipmakers to outsource chip assembly, testing and packaging to smaller Asian countries.

So when SK Hynix and its US partner Advanced Micro Devices Inc. introduced HBM to the world in 2013, the two companies went unchallenged for two years, until Samsung developed HBM2 in late 2015. Lee joined SK Hynix three years later. At SK Hynix, they joked with some pride that HBM stood for “Hynix's Best Memory.”

“SK Hynix’s management had better insight into where the industry was heading and was well prepared,” said Sanjeev Rana, an analyst at CLSA Securities Korea. “When the opportunity came, they grabbed it with both hands.” As for Samsung, “they were caught napping.”

The release of ChatGPT in November 2022 was the moment Lee had been waiting for. By then, with help from his contacts in Japan, his team had developed a new packaging method called mass reflow-molded underfill (MR-MUF). The process, which injects a liquid material between the silicon layers and then cures it, improves heat dissipation and production yields. SK Hynix worked with Japan's Namics on the material and related patents, according to sources.

SK Hynix is putting most of its new investment into advancing MR-MUF and TSV technology, Lee said.

Samsung was preoccupied for years with a succession battle at the top, but it is now fighting back. Nvidia signed on for Samsung's HBM chips last year, and on February 26 the Suwon-based company announced that it had developed its fifth-generation technology, HBM3E, with 12 stacked layers of DRAM chips and the industry's largest capacity, 36GB.

The same day, Boise, Idaho-based Micron surprised industry observers by announcing that it had begun mass production of 24GB, 8-layer HBM3E, which will be part of Nvidia's H200 Tensor Core GPUs shipping in the second quarter.

With a major commitment to expanding and strengthening its domestic technology and plans for a multibillion-dollar advanced packaging facility in the U.S., SK Hynix's prospects remain strong even in the face of increasing competition, Lee believes, and he remains bullish. He says the current investment lays the foundation for meeting demand for future generations of HBM.


©2024 Bloomberg LP