
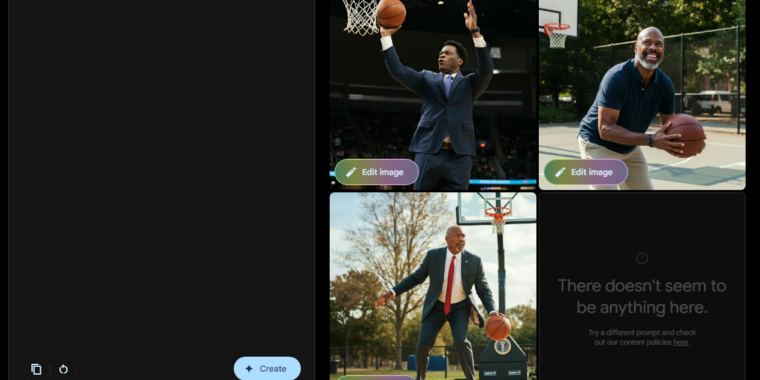

Imagen 3’s vision of a generic US president playing basketball looks a bit like Uncle Phil from The Fresh Prince of Bel-Air.

Google / Ars Technica


Ask Imagen 3 for an image of a specific president, and your request will be denied.

Google / Ars Technica

Google’s Gemini AI model can once again generate images of humans after the feature was “paused” in February following an outcry over historically inaccurate racial depictions in many of its results. In a blog post, Google said that its Imagen 3 model, first announced in May, “will begin rolling out human image generation capabilities” to Gemini Advanced, Business, and Enterprise users “soon.” But a version of its Imagen model with human image generation capabilities was recently made available to the public through the Gemini Labs test environment without a paid subscription (though you’ll need a Google account to log in).

Of course, this new model comes with some safeguards to avoid creating controversial imagery. In its announcement, Google said it doesn’t support “the generation of graphic, identifiable people, depictions of minors, or scenes that are excessively gory, violent, or sexual.” In an FAQ, Google clarifies that its ban on “identifiable people” includes “certain queries that may result in the output of public figures.” In Ars’ tests, this meant that queries like “President Biden playing basketball” were rejected, while a more general request for “US President playing basketball” generated multiple options.

In a quick test of the new Imagen 3 system, Ars found that it avoids many of the widely shared “historically inaccurate” racial pitfalls that caused Google to halt Gemini’s human image generation in the first place. For example, asking Imagen 3 for a “historically accurate depiction of a British king” produces a set of bearded white men in red robes, rather than the racially diverse mix of warriors from the pre-pause Gemini model. You can see more before-and-after examples of the old Gemini and the new Imagen 3 in the gallery below.


Imagen 3’s vision of a generic pope…

Google Imagen / Ars Technica


…and the Gemini version before the pause.


Imagen 3’s vision of a generic 1800s US senator…

Google Imagen / Ars Technica


…and the Gemini version before the pause. The first woman to serve in the US Senate took office in the 1920s.


Imagen 3’s version of a Scandinavian ice fisherman…


…and the Gemini version before the pause.


Imagen 3’s elderly couple from Scotland…

Google Imagen / Ars Technica


…and the Gemini version before the pause.


Imagen 3’s version of a Canadian hockey player…

Google Imagen / Ars Technica


…and the Gemini version before the pause.


Imagen 3’s generic version of the US Founding Fathers…

Google Imagen / Ars Technica


…and the Gemini version before the pause.


Imagen 3’s 15th-century New World explorers look very European.

Google Imagen / Ars Technica

But attempts to depict generic historical scenes appear to run afoul of Google’s AI rules. Asking for an illustration of “German soldiers in 1943” (a prompt to which the pre-pause Gemini responded with Asian and Black people in Nazi-style uniforms) now returns a message telling users to “try a different prompt and review our content policies.” In Ars’ tests, the same error message appeared when requesting images of “ancient Chinese philosophers,” “women’s suffrage leaders giving speeches,” and “groups of nonviolent protesters.”

“Of course, like any generative AI tool, not all images Gemini creates are perfect, but we’ll continue to improve it as we listen to feedback from our early users,” the company wrote in a blog post. “We aim to roll this out gradually and to offer it to even more users and languages soon.”

Listing image from Google/Ars Technica