Scientists from Google Research have published a paper on GameNGen, an AI-based game engine that generates original Doom gameplay on a neural network. Dani Valevski, Yaniv Leviathan, Moab Arar, and Shlomi Fruchter designed GameNGen, which uses Stable Diffusion to process previous frames and the player's current input and generate new frames of the game world with incredible visual fidelity and consistency.

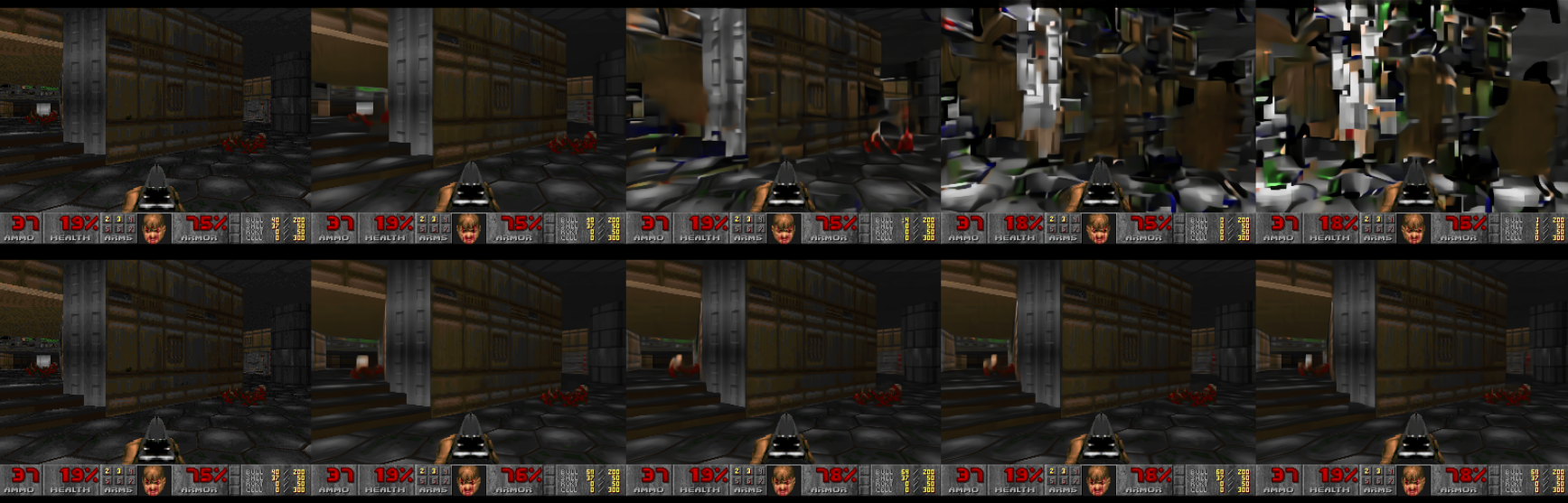

Using AI to generate a complete game engine with consistent logic is a unique achievement. GameNGen's Doom can be played just like a real video game: you can turn, shoot, and take precise damage from enemies and environmental hazards. Real Doom levels are built around you in real time as you explore, and even the pistol's ammo count is tracked almost exactly. According to the researchers, the game runs at 20 FPS and, in short clips, is hard to distinguish from real Doom gameplay.
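To make the generation loop concrete (each new frame produced from previous frames plus the player's current input, at roughly 20 frames per second, or about 50 ms per frame), here is a minimal sketch. It assumes a hypothetical `predict_next_frame` callable standing in for the diffusion model and a hypothetical `read_input` callable for the controller; the context length of 4 is also an illustrative assumption, not GameNGen's actual setting.

```python
from collections import deque
from typing import Callable

Frame = list[float]  # toy frame: flattened pixel values

CONTEXT_LENGTH = 4  # hypothetical number of past frames/inputs kept as context


def run_session(
    first_frames: list[Frame],
    first_actions: list[int],
    read_input: Callable[[], int],  # hypothetical: returns the player's current input
    predict_next_frame: Callable[[list[Frame], list[int]], Frame],  # hypothetical model stand-in
    num_frames: int,
) -> list[Frame]:
    """Autoregressively generate num_frames new frames.

    Each frame is predicted from a sliding window of recent frames and player
    inputs; the generated frame then becomes part of the context for the next one.
    """
    frames = deque(first_frames, maxlen=CONTEXT_LENGTH)
    actions = deque(first_actions, maxlen=CONTEXT_LENGTH)
    generated: list[Frame] = []
    for _ in range(num_frames):
        actions.append(read_input())  # the current input joins the context window
        next_frame = predict_next_frame(list(frames), list(actions))
        frames.append(next_frame)  # feed the model's own output back in as context
        generated.append(next_frame)
    return generated
```

Because the deques have a fixed `maxlen`, the oldest frame and input drop out automatically each step, so the model always sees a window of the same size.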

To gather the training data GameNGen needs to model Doom accurately, the Google team trained an AI agent to play Doom at every difficulty level, simulating a range of player skill levels. Actions like collecting power-ups and completing a level were rewarded, while taking damage and dying were penalized. The agent's play provided hundreds of hours of visual Doom training data for the GameNGen model to reference and reproduce.
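The article does not give GameNGen's actual reward values. The sketch below is a hypothetical per-step reward function of the kind described, rewarding power-ups and level completion and penalizing damage and death; the `StepEvents` fields and all weights are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class StepEvents:
    """Hypothetical summary of what happened during one game step."""
    powerups_collected: int = 0
    level_completed: bool = False
    damage_taken: float = 0.0
    died: bool = False


# Hypothetical weights; not taken from the GameNGen paper.
POWERUP_REWARD = 1.0
LEVEL_COMPLETE_REWARD = 10.0
DAMAGE_PENALTY_PER_POINT = 0.1
DEATH_PENALTY = 5.0


def step_reward(events: StepEvents) -> float:
    """Positive reward for progress, negative reward for getting hurt or dying."""
    reward = POWERUP_REWARD * events.powerups_collected
    if events.level_completed:
        reward += LEVEL_COMPLETE_REWARD
    reward -= DAMAGE_PENALTY_PER_POINT * events.damage_taken
    if events.died:
        reward -= DEATH_PENALTY
    return reward
```

For example, a step in which the agent picks up two power-ups but takes 10 points of damage nets a reward of about 1.0 under these weights. Tuning such weights shapes how cautious or aggressive the agent plays, which in turn shapes what kind of footage the model learns from.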

A key innovation in this work is how the scientists maintained coherence between frames over long periods of time while using Stable Diffusion. Stable Diffusion is a ubiquitous generative AI model that creates images from text prompts or from other images, and it has been used in animation projects since its release in 2022.

The two major weaknesses of Stable Diffusion in animation are a lack of frame-to-frame consistency and an eventual degradation of visual fidelity over time. As seen in Corridor’s Anime Rock Paper Scissors short film, Stable Diffusion can create convincing still images, but it introduces a flickering effect when the model outputs successive frames (note how a shadow appears to jump across the actor’s face with each frame).

Flickering can be fixed by feeding Stable Diffusion's output back into the model, so that each new frame is generated from the images it has just created and consecutive frames match up. However, after a few hundred frames, image generation becomes less accurate, much like a copy of a copy that has been copied too many times.
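The "copy of a copy" effect can be illustrated with a toy model. The sketch below is an analogy, not Stable Diffusion: a hypothetical `imperfect_copy` function reproduces a one-dimensional "frame" almost exactly but slightly blurs it, and feeding each output back in as the next input shows how small per-step losses accumulate over hundreds of frames.

```python
def imperfect_copy(frame: list[float]) -> list[float]:
    """Toy stand-in for a generator that almost, but not exactly, reproduces its input.

    Each pixel is averaged with its neighbours (edges clamped), so a little
    detail is lost on every pass while the average brightness is preserved.
    """
    n = len(frame)
    out = []
    for i in range(n):
        left = frame[max(i - 1, 0)]
        right = frame[min(i + 1, n - 1)]
        out.append(0.25 * left + 0.5 * frame[i] + 0.25 * right)
    return out


def contrast(frame: list[float]) -> float:
    """Difference between the brightest and darkest pixel."""
    return max(frame) - min(frame)


def rollout(first_frame: list[float], steps: int) -> list[list[float]]:
    """Feed each output back in as the next input, like an autoregressive generator."""
    frames = [first_frame]
    for _ in range(steps):
        frames.append(imperfect_copy(frames[-1]))
    return frames


# A sharp edge: eight dark pixels followed by eight bright ones.
frames = rollout([0.0] * 8 + [1.0] * 8, 300)
```

After 10 passes the edge still spans nearly the full brightness range, but after 300 passes it has faded to a low-contrast gradient, even though no single pass changed the frame much.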

Google Research solved this problem by conditioning each new frame on a longer sequence of user inputs and previous frames rather than on a single prompt image, and by corrupting these context frames with Gaussian noise during training. A separate but connected neural network then corrects the context frames, so the images are constantly self-correcting and a high level of visual stability is maintained over time.
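The noise-corruption step can be sketched as a function that builds one training example: a window of previous frames and inputs, with the context frames corrupted by Gaussian noise, plus the clean frame the model should predict. This is a minimal sketch under stated assumptions: the names `TrainingExample` and `make_training_example`, the context length, and the noise range are hypothetical, and passing the sampled noise level alongside the context is an assumed design choice rather than a detail given in the article.

```python
import random
from dataclasses import dataclass

Frame = list[float]  # toy frame: flattened pixel values

CONTEXT_LENGTH = 4   # hypothetical context window size
MAX_NOISE_STD = 0.7  # hypothetical upper bound on the corruption strength


@dataclass
class TrainingExample:
    context_frames: list[Frame]  # previous frames, corrupted with Gaussian noise
    context_actions: list[int]   # player inputs aligned with those frames
    noise_level: float           # standard deviation of the noise that was added
    target_frame: Frame          # clean frame the model should learn to predict


def make_training_example(
    frames: list[Frame], actions: list[int], t: int, rng: random.Random
) -> TrainingExample:
    """Build one example for predicting frames[t].

    Assumes actions[i] is the input given right after frames[i] was shown,
    so actions[t - 1] is the input that leads to frames[t].
    """
    if t < CONTEXT_LENGTH:
        raise ValueError("need at least CONTEXT_LENGTH previous frames")
    noise_std = rng.uniform(0.0, MAX_NOISE_STD)  # random corruption strength per example
    noisy_context = [
        [pixel + rng.gauss(0.0, noise_std) for pixel in frame]
        for frame in frames[t - CONTEXT_LENGTH:t]
    ]
    return TrainingExample(
        context_frames=noisy_context,
        context_actions=list(actions[t - CONTEXT_LENGTH:t]),
        noise_level=noise_std,
        target_frame=list(frames[t]),  # the target itself stays clean
    )
```

The idea is that at play time the context consists of the model's own earlier outputs, which carry small errors; having learned to predict clean frames from deliberately noisy contexts, the model is pushed to correct those errors instead of compounding them.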

The GameNGen examples we’ve seen so far are certainly not perfect. Specks and blurry objects appear randomly on screen, and enemies turn into blurry blobs after they die. Doomguy's face on the HUD constantly moves its eyebrows up and down, like The Rock on Monday Night Raw. And, of course, the generated levels are inconsistent at best. The YouTube video embedded above ends with Doomguy suddenly no longer taking damage at 4% health, then spinning 360 degrees in the poison pit, which completely changes his position.

The result is not a winnable video game, but GameNGen is still recognizably the Doom we love. Somewhere between a tech demo and a thought experiment about the future of AI, Google’s GameNGen will be a key part of future AI game development if the field continues to advance. Combined with research from Caltech that uses Minecraft to teach AI models consistent map generation, it suggests AI-based video game engines may be coming to a computer near you sooner than we thought.