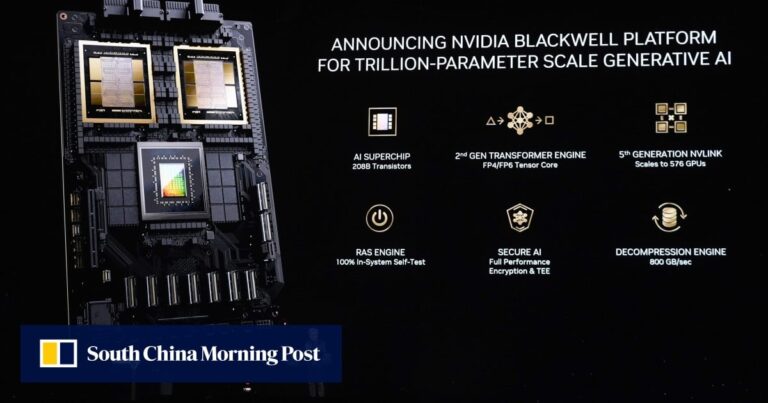

The new processor design, called Blackwell, is several times faster at handling the models that power AI, the company said at the GTC conference in San Jose, California. That includes both the process of developing the technology (a stage known as training) and the process of running it (known as inference).

China has expressed the view that it is unable to match the US’s AI progress amid major challenges.

Blackwell (named after David Blackwell, the first Black scholar inducted into the National Academy of Sciences) has a tough act to follow. Its predecessor, Hopper, fueled explosive sales at Nvidia by building up the field of AI accelerator chips. The H100, the flagship product of that lineup, has become one of the most prized commodities in the technology world, fetching tens of thousands of dollars per chip.

This growth has also sent Nvidia’s valuation soaring. It is the first chipmaker to reach a market capitalization of more than US$2 trillion, and it trails only Microsoft and Apple overall.

The announcement of the new chip was widely anticipated, and Nvidia’s stock had risen 79% this year through Monday’s close. That made it difficult for the details of the presentation to impress investors, and the shares fell about 1% in after-hours trading.

Jensen Huang, Nvidia’s co-founder and chief executive, said AI is driving fundamental change in the economy and that Blackwell chips are “the engine driving this new industrial revolution.”

Nvidia is “working with the world’s most dynamic companies to realize the potential of AI across every industry,” he said at Monday’s conference, the company’s first in-person event since the pandemic.

Blackwell also has an improved ability to link with other chips, along with new ways of processing AI-related data that speed up the work. It is part of the next version of the company’s “superchip” lineup, in which it is paired with Grace, Nvidia’s central processing unit. Users will have the option of combining these products with new networking chips, some using the proprietary InfiniBand standard and others relying on the more common Ethernet protocol. Nvidia is also updating its HGX server machines with the new chips.

The Santa Clara, California-based company got its start selling graphics cards that became popular among computer gamers. Nvidia’s graphics processing units (GPUs) ultimately succeeded in other areas because they can split computations into many simple tasks and process them in parallel. The technology is now gradually moving to more complex, multi-step tasks based on ever-growing datasets.

Blackwell will help drive the transition beyond relatively simple AI jobs such as voice recognition and image creation, the company said. That could mean generating a 3D video just by speaking to a computer, relying on a model with trillions of parameters.

Nvidia’s success has become heavily dependent on a handful of cloud computing giants: Amazon, Microsoft, Google, and Meta Platforms. These companies are pouring money into data centers, aiming to outdo their rivals with new AI-related services.

Nvidia’s challenge is to spread its technology to more customers. Huang aims to achieve this by making it easier for businesses and governments to implement AI systems using their own software, hardware, and services.

Huang’s speech kicked off the four-day GTC event, which has been dubbed a “Woodstock” for AI developers. Some of the highlights of the presentation are listed below.

Huang concluded the event by bringing two robots on stage, which he said were trained with Nvidia’s simulation tools.

“In the future, everything that moves will be a robot,” he said.