Article Highlights | February 16, 2024

FAIR (findable, accessible, interoperable, reusable) principles make large datasets easier for both researchers and machines to use.


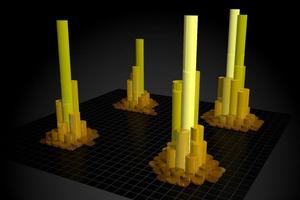

FAIR data principles aim to automate data management and to link data sources, AI, extreme-scale computing, and edge computing with modern scientific data infrastructure, paving the way for automated and accelerated discovery.


Credit: Image courtesy of Argonne Leadership Computing Facility Visualization and Data Analytics Group

Science

The Higgs boson is the fundamental particle responsible for generating the mass of all other elementary particles. Since its discovery at the CERN Large Hadron Collider in 2012, researchers have developed strategies to understand how the Higgs boson interacts with other elementary particles. Scientists are also searching this experimental data for clues that may point to physics beyond our current understanding of nature. This science relies on the ability to extract new insights from vast experimental datasets. To help with this, researchers have defined practical FAIR (findable, accessible, interoperable, reusable) principles for data. FAIR helps both humans and computers work with large datasets, and it allows those datasets to be processed in modern computing environments. This work is important for developing artificial intelligence (AI) tools that can identify new patterns and features in experimental data.

Impact

This study provides a guide that researchers can use to create datasets that comply with the FAIR principles and to assess whether existing datasets do. Compliant datasets can be used (and reused) by both humans and machines without time-consuming manual preprocessing. The guide also helps researchers prepare FAIR datasets for use in modern computing environments. If this vision becomes a reality, scientific facilities will be able to transfer experimental data seamlessly to modern computing environments, including high-performance computers. Researchers can then use the data to create new AI algorithms that provide reliable predictions and extract new knowledge.

Summary

In this project, researchers developed a domain-independent, step-by-step evaluation guide to assess whether a given dataset meets the FAIR principles. They demonstrated its application using an open simulated dataset produced by the CMS Collaboration at the CERN Large Hadron Collider. The researchers also developed and shared tools to visualize and explore this dataset. The overarching goal of this work is to provide a blueprint for integrating datasets, AI tools, and smart cyberinfrastructure. Such integration supports the creation of a rigorous AI framework for interdisciplinary discovery and innovation.
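To make the idea of a step-by-step FAIR assessment concrete, here is a minimal Python sketch. It is not the researchers' guide: the `DatasetRecord` fields, the `OPEN_FORMATS` set, and the four checks are simplified assumptions chosen for illustration, and the identifier and URL in the example are placeholders.

```python
from dataclasses import dataclass, field

# Hypothetical metadata record. The field names are illustrative
# assumptions and are not taken from the study's evaluation guide.
@dataclass
class DatasetRecord:
    identifier: str = ""                           # persistent ID, e.g. a DOI
    metadata: dict = field(default_factory=dict)   # descriptive metadata
    access_url: str = ""                           # where the data can be retrieved
    file_format: str = ""                          # e.g. "hdf5"
    license: str = ""                              # usage license

# Assumed set of open, widely supported formats (illustrative only).
OPEN_FORMATS = {"hdf5", "root", "csv", "parquet"}

def assess_fair(record: DatasetRecord) -> dict:
    """Return one pass/fail flag per FAIR principle (simplified checks)."""
    return {
        "findable": bool(record.identifier) and bool(record.metadata),
        "accessible": record.access_url.startswith(("http://", "https://")),
        "interoperable": record.file_format.lower() in OPEN_FORMATS,
        "reusable": bool(record.license),
    }

def is_fair(record: DatasetRecord) -> bool:
    """A record passes only if every individual check passes."""
    return all(assess_fair(record).values())

# Placeholder example record (not a real dataset).
example = DatasetRecord(
    identifier="doi:10.0000/example",
    metadata={"title": "Example simulation sample"},
    access_url="https://example.org/data",
    file_format="HDF5",
    license="CC0-1.0",
)
print(assess_fair(example))
```

A real assessment would cover far more criteria for each principle, such as metadata richness, standard vocabularies, and provenance. The sketch only shows how such a checklist could be made machine-checkable.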

Over the next decade, as the scientific community adopts FAIR AI models and data, researchers will be able to gradually close the gap between theory and experimental science. AI models, currently trained with large-scale simulations and approximate mathematical models, will steadily improve their ability to learn and describe nature, identifying principles and patterns that go beyond existing theory. Over time, AI will be able to combine knowledge from mathematics, physics, and scientific computing to provide a holistic understanding of natural phenomena and advance science.

Funding

This research was supported by the Department of Energy (DOE) Office of Science, the Advanced Scientific Computing Research FAIR Data Program, and the FAIR Framework Project for Physics-Inspired Artificial Intelligence in High Energy Physics. It used resources from the Argonne Leadership Computing Facility, a DOE Office of Science user facility. One of the researchers also received support from a Halicioğlu Data Science Fellowship.